
X-Mas Cards

Every year, a Christmas card showing aspects of our research projects is produced and sent out.

VRVis Competence Center

The VRVis K1 Research Center is the leading application-oriented research center in the area of virtual reality (VR) and visualization (Vis) in Austria and is internationally recognized. You can find extensive information about the VRVis Center here.

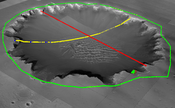

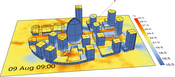

Smart Communities and Technologies: 3D Spatialization

The Research Cluster "Smart Communities and Technologies" (Smart CT) at TU Wien will provide the scientific underpinnings for next-generation complex smart city and community infrastructures. Cities are ever-evolving, complex cyber-physical systems of systems covering a multitude of different areas. The concept of smart cities and communities started with cities using communication technologies to deliver services to their citizens and evolved toward using information technology to utilize their resources more intelligently and efficiently. In recent years, however, information technology has changed significantly, and with it, the resources and areas a smart city can address have broadened considerably. They now include smart buildings, smart products and production, smart traffic systems and roads, autonomous driving, smart grids for managing energy hubs and electric car utilization, and urban environmental systems research.

3D spatialization links the internet-of-cities infrastructure to the actual 3D world in which a city is embedded, so that advanced computation and visualization tasks can be performed. Sensors, actuators, and users are embedded in a complex 3D environment that is constantly changing. Acquiring, modeling, and visualizing this dynamic 3D environment are the challenges we address using methods from Visual Computing and Computer Graphics. 3D spatialization aims to make a city aware of its 3D environment, allowing it to perform spatial reasoning to solve problems related to visibility, accessibility, lighting, and energy efficiency.
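As a rough illustration of the kind of spatial reasoning meant here, the sketch below answers one visibility question (can a sensor at point `a` see point `b`?) on a city modeled as a 2.5D height grid. The grid representation, the function name `line_of_sight`, and the fixed sampling step are assumptions made for illustration; they are not part of the Smart CT project.

```python
from math import ceil, floor


def line_of_sight(heights, a, b, step=0.25):
    """Return True if the segment a->b clears every cell of a 2.5D height grid.

    heights: list of rows; heights[y][x] is the (hypothetical) building or
             terrain height of cell (x, y), each cell 1 unit wide.
    a, b:    (x, y, z) points, e.g. a sensor and a target.
    step:    sampling distance along the segment (an assumed parameter).
    """
    ax, ay, az = a
    bx, by, bz = b
    length = ((bx - ax) ** 2 + (by - ay) ** 2 + (bz - az) ** 2) ** 0.5
    samples = max(1, ceil(length / step))
    for i in range(1, samples):  # skip the endpoints themselves
        t = i / samples
        x = ax + t * (bx - ax)
        y = ay + t * (by - ay)
        z = az + t * (bz - az)
        cx, cy = floor(x), floor(y)
        if 0 <= cy < len(heights) and 0 <= cx < len(heights[cy]):
            if heights[cy][cx] > z:
                return False  # the segment passes below this cell's height
    return True
```

Because the segment is only sampled at discrete steps, obstacles thinner than `step` can be missed; an exact cell-by-cell grid traversal would avoid that at the cost of slightly more code.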

Advanced Computational Design

Toward Optimal Path Guiding for Photorealistic Rendering

Instant Visualization and Interaction for Large Point Clouds

Point clouds are a quintessential 3D geometry representation format and are often the first model obtained from reconstruction efforts such as LIDAR scans. IVILPC aims for fast, authentic, interactive, and high-quality processing of such point-based data sets. Our project explores high-performance software rendering routines for various point-based primitives, such as point sprites, Gaussian splats, surfels, and particle systems. Beyond conventional use cases, point cloud rendering also forms a key component of point-based machine learning methods and novel-view synthesis, where performance is paramount. We will exploit the flexibility and processing power of cutting-edge GPU architecture features to formulate novel, high-performance rendering approaches. The envisioned solutions will be applicable to unstructured point clouds for instant rendering of billions of points. Our research targets minimally invasive compression, culling methods, and level-of-detail techniques for point-based rendering to deliver high performance and quality on demand. We also explore GPU-accelerated editing of point clouds, as well as common display issues on next-generation display devices. IVILPC lays the foundation for interaction with large point clouds in conventional and immersive environments. Its goal is efficient data and knowledge transfer from sensor to user, with a wide range of use cases in image-based rendering, virtual reality (VR) technology, architecture, the geospatial industry, and cultural heritage.
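To make the idea of software point rendering with built-in culling concrete, here is a minimal CPU sketch. It assumes a pinhole camera at the origin looking along +z, and it borrows a trick used by some GPU compute-shader point rasterizers: packing depth and point index into one 64-bit key, so that a single minimum operation both resolves visibility and records the winning point. The function `rasterize_points` and its parameters are hypothetical; this is not IVILPC's renderer.

```python
import struct
from math import floor

EMPTY = 2 ** 64 - 1  # marks a pixel that no point has reached


def rasterize_points(points, width, height, focal):
    """Project (x, y, z) points into a width x height buffer; nearest wins.

    Depth occupies the high 32 bits of each key and the point index the
    low 32 bits (so at most 2**32 points), so min() performs the depth
    test and keeps the visible point's index in one step.
    """
    buffer = [EMPTY] * (width * height)
    for index, (x, y, z) in enumerate(points):
        if z <= 0:
            continue  # behind the camera: cull
        u = floor(focal * x / z + width / 2)
        v = floor(focal * y / z + height / 2)
        if not (0 <= u < width and 0 <= v < height):
            continue  # outside the view frustum: cull
        # Bit patterns of positive float32 values sort like the values.
        depth_bits = struct.unpack("<I", struct.pack("<f", z))[0]
        key = (depth_bits << 32) | index
        pixel = v * width + u
        buffer[pixel] = min(buffer[pixel], key)
    # Decode: index of the visible point per pixel, or None if empty.
    return [None if key == EMPTY else key & 0xFFFFFFFF for key in buffer]
```

On a GPU, each point would typically be processed by its own thread and `min` would become a 64-bit atomic minimum on a shared buffer; the packed key layout is what lets that single operation replace a separate depth test and write.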

Modeling the World at Scale

Vision: reconstruct a model of the world that permits online level-of-detail extraction.

Joint Human-Machine Data Exploration

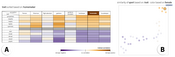

Wider research context

In many domains, such as biology, chemistry, medicine, and the humanities, large amounts of data exist. Visual exploratory analysis of these data is often not practicable due to their size and their unstructured nature. Traditional machine learning (ML) requires large-scale labeled training data and a clear target definition, which is typically not available when exploring unknown data. For such large-scale, unstructured, open-ended, and domain-specific problems, we need an interactive approach combining the strengths of ML and human analytical skills into a unified process that helps users to "detect the expected and discover the unexpected".

Hypotheses

We hypothesize that humans and machines can learn jointly from the data and from each other during exploratory data analysis. We further hypothesize that this joint learning enables a new visual analytics approach that reveals how users' incrementally growing insights fit the data, which will foster questioning and reframing.

Approach

We integrate interactive ML and interactive visualization to learn about data and from data in a joint fashion. To this end, we propose a data-agnostic joint human-machine data exploration (JDE) framework that supports users in the exploratory analysis and the discovery of meaningful structures in the data. In contrast to existing approaches, we investigate data exploration from a new perspective that focuses on the discovery and definition of complex structural information from the data rather than primarily on the model (as in ML) or on the data itself (as in visualization).

Innovation

First, the conceptual framework of JDE introduces a novel knowledge modeling approach for visual analytics based on interactive ML that incrementally captures potentially complex, yet interpretable concepts that users expect or have learned from the data. Second, it proposes an intelligent agent that elicits information fitting the users' expectations and discovers what may be unexpected for the users. Third, it relies on a new visualization approach focusing on how the large-scale data fits the users' knowledge and expectations, rather than solely on the data. Fourth, this leads to novel exploratory data analysis techniques: an interactive interplay between knowledge externalization, machine-guided data inspection, questioning, and reframing.
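The following toy sketch hints at how such an interplay might look in code. It is not the JDE framework: a nearest-centroid model stands in for interactive ML, user-labeled example vectors stand in for externalized knowledge, and the "agent" simply surfaces the items that fit no known concept well. All names (`explore_step`, `concepts`, `k`) are hypothetical.

```python
from math import dist


def centroid(vectors):
    """Component-wise mean of equally long feature vectors."""
    return tuple(sum(c) / len(vectors) for c in zip(*vectors))


def explore_step(items, concepts, k=2):
    """One hypothetical human-machine round over feature vectors.

    items:    dict name -> feature vector (the unlabeled data).
    concepts: dict concept -> list of example vectors labeled by the user.
    Returns (expected, unexpected): each item mapped to its nearest concept,
    plus the k items farthest from every concept, which the agent would
    present to the user for inspection and possible reframing.
    """
    centers = {name: centroid(examples) for name, examples in concepts.items()}
    expected = {}
    misfit = []
    for item, vec in items.items():
        concept, d = min(((c, dist(vec, m)) for c, m in centers.items()),
                         key=lambda pair: pair[1])
        expected[item] = concept
        misfit.append((d, item))
    misfit.sort(reverse=True)  # worst-fitting items first
    unexpected = [item for _, item in misfit[:k]]
    return expected, unexpected
```

In a further round, the user might inspect the returned `unexpected` items, label some of them as a new concept, and call `explore_step` again, so that both the model and the user's set of concepts grow incrementally.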

Primary researchers involved

The project is a collaboration between researchers from TU Wien (Manuela Waldner) and the University of Applied Sciences St. Pölten (Matthias Zeppelzauer), Austria, who combine their complementary expertise in information visualization, visual analytics, and interactive ML.

FWF Stand-alone project P 36453

Photogrammetry made easy

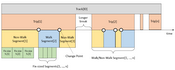

Superhumans - Walking Through Walls

In recent years, virtual and augmented reality have gained widespread attention because of newly developed head-mounted displays. For the first time, mass-market penetration seems plausible. Also, range sensors are on the verge of being integrated into smartphones, as evidenced by prototypes such as the Google Tango device, making ubiquitous online acquisition of 3D data a possibility. The combination of these two technologies, displays and sensors, promises applications where users can be directly immersed in 3D data that was just captured live.

However, the captured data needs to be processed and structured before being displayed. For example, sensor noise needs to be removed and normals need to be estimated for local surface reconstruction. The challenge is that these operations involve a large amount of data, and to ensure a lag-free user experience, they need to be performed in real time, i.e., in just a few milliseconds per frame.

In this proposal, we exploit the fact that dynamic point clouds captured in real time are often only relevant for display and interaction in the current frame and inside the current view frustum. In particular, we propose a new view-dependent data structure that permits efficient connectivity creation and traversal of unstructured data, which will speed up surface recovery, e.g., for collision detection. Classifying occlusions comes at no extra cost, which will allow quick access to occluded layers in the current view. This enables new methods to explore and manipulate dynamic 3D scenes that go beyond interaction methods relying on physics-based metaphors like walking or flying, lifting interaction with 3D environments to a “superhuman” level.
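One way to picture a view-dependent structure with free occlusion classification is a per-pixel bucket of points sorted by depth, in the spirit of a layered depth image. The sketch below is an illustrative assumption, not the data structure proposed here: it assumes a pinhole camera at the origin looking along +z, keeps only points inside the view frustum, exposes occluded layers by index, and derives a naive connectivity from neighboring pixels. The functions `build_layers`, `layer`, and `neighbors` are hypothetical.

```python
from collections import defaultdict
from math import floor


def build_layers(points, width, height, focal):
    """Bucket (x, y, z) points by pixel and sort each bucket front to back."""
    layers = defaultdict(list)  # (u, v) -> [(depth, point index), ...]
    for index, (x, y, z) in enumerate(points):
        if z <= 0:
            continue  # behind the camera
        u = floor(focal * x / z + width / 2)
        v = floor(focal * y / z + height / 2)
        if 0 <= u < width and 0 <= v < height:  # inside the view frustum
            layers[(u, v)].append((z, index))
    for bucket in layers.values():
        bucket.sort()  # layer 0 is visible; layers 1, 2, ... are occluded
    return layers


def layer(layers, u, v, n):
    """Index of the point at occlusion layer n of pixel (u, v), or None."""
    bucket = layers.get((u, v), [])  # .get does not insert into the defaultdict
    return bucket[n][1] if n < len(bucket) else None


def neighbors(layers, u, v, n, radius=1):
    """Points in layer n of the surrounding pixels: a cheap connectivity."""
    found = []
    for du in range(-radius, radius + 1):
        for dv in range(-radius, radius + 1):
            if (du, dv) != (0, 0):
                idx = layer(layers, u + du, v + dv, n)
                if idx is not None:
                    found.append(idx)
    return found
```

Treating the same layer number in adjacent pixels as a neighborhood is a crude proxy: points from different surfaces can share a layer index, so a practical variant would also compare depths before connecting points.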

Advanced Visual and Geometric Computing for 3D Capture, Display, and Fabrication

This Marie Curie project creates a leading Europe-wide doctoral college for research in Advanced Visual and Geometric Computing for 3D Capture, Display, and Fabrication.