Information

- Publication Type: Master Thesis

- Workgroup(s)/Project(s):

- Date: November 2015

- Date (Start): 28. February 2014

- Date (End): 12. November 2015

- TU Wien Library:

- Diploma Examination: 12. November 2015

- First Supervisor:

- Keywords: virtual reality, stereo rendering

Abstract

In this thesis we discuss the use of omnidirectional stereo (omnistereo) rendering of virtual environments. We present an artefact-free technique to render omnistereo images for the CAVE in real time using the modern rendering pipeline and GPU-based tessellation.

Depth perception in stereoscopic images is enabled through the horizontal disparities seen by the left and right eye. Conventional stereoscopic rendering, using off-axis or toe-in projections, provides correct depth cues in the entire field of view (FOV) for a single view direction. Omnistereo panorama images, created from captures of the real world, provide stereo depth cues in all view directions. This concept has been adopted for rendering, and several techniques that generate omnistereo images of virtual environments have been presented. This is especially relevant for surround-screen displays, as stereo depth can be provided simultaneously for all view directions of a 360° panorama for viewers in an upright position. Omnistereo rendering also removes the need for view-direction tracking, since, unlike conventional stereoscopic projections, the projection is independent of the view direction. However, omnistereo images only provide correct depth cues in the center of the FOV. Stereo disparity distortion errors occur in the periphery of the view and worsen with distance from the center of the view. Nevertheless, due to a number of properties of the human visual system, these errors are not necessarily noticeable.
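The geometry behind omnistereo can be sketched for a single scene point. The following is a minimal sketch, assuming the common formulation in which both eyes lie on a horizontal circle of radius IPD/2 around the head center and each point is seen along the ray that is tangent to that circle. The function names, the 64 mm IPD and the top-down 2D convention (x to the right, y forward) are illustrative assumptions, not taken from the thesis.

```python
import math


def omnistereo_eye(px, py, half_ipd, left):
    """Per-point eye position for omnidirectional stereo (illustrative sketch).

    Assumed convention: top-down 2D view of the horizontal plane, head center
    at the origin, x to the right, y forward. The eye T lies on the circle of
    radius half_ipd and the ray T -> P is tangent to it, i.e. T . (P - T) == 0.
    """
    d = math.hypot(px, py)
    if d <= half_ipd:
        raise ValueError("point lies inside the eye circle; no tangent ray exists")
    theta = math.atan2(py, px)        # azimuth of P seen from the head center
    alpha = math.acos(half_ipd / d)   # angle between T and P at the center
    # In this convention, rotating counterclockwise from P's direction
    # moves towards the viewer's left.
    phi = theta + alpha if left else theta - alpha
    return half_ipd * math.cos(phi), half_ipd * math.sin(phi)


def apparent_azimuth(px, py, half_ipd, left):
    """Azimuth of the tangent ray from the chosen eye towards P."""
    ex, ey = omnistereo_eye(px, py, half_ipd, left)
    return math.atan2(py - ey, px - ex)


if __name__ == "__main__":
    half_ipd = 0.032  # assumed 64 mm interpupillary distance
    for dist in (0.5, 2.0, 10.0):
        left = apparent_azimuth(0.0, dist, half_ipd, left=True)
        right = apparent_azimuth(0.0, dist, half_ipd, left=False)
        disparity = math.degrees(right - left)
        print(f"{dist:5.1f} m ahead: angular disparity {disparity:.3f} deg")
```

Under these assumptions the two eyes see a point at distance d at azimuths differing by 2·asin(IPD/(2d)), so the disparity shrinks with distance and does not depend on the view direction. Because the eye position changes from point to point, the mapping is non-linear: a rasterizer connects warped vertices with straight edges, while the omnistereo image of a long straight edge is generally curved, which is why large polygons need tessellation.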

We improved the existing object-warp based omnistereo rendering technique for

CAVE display systems by preceding it with screen-space adaptive tessellation methods.

Our improved technique creates images without perceivable artefacts and runs on

the GPU at real-time frame rates. The artefacts produced by the original technique

without tessellation are described by us. Tessellation is used to remedy edge curvature

and texture interpolation artefacts occurring at large polygons, due to the non-linearity

of the omnistereo perspective. The original approach is based on off-axis projections.

We showed that on-axis projections can be used as basis as well, leading to identical

images. In addition, we created a technique to efficiently render omnistereo skyboxes

for the CAVE using a pre-tessellated full-screen mesh. We implemented the techniques

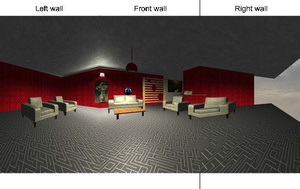

as part of an application for a three-walled CAVE in the VRVis research center and

compared them.
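Screen-space adaptive tessellation typically chooses a subdivision level per edge from the edge's projected length. The sketch below shows one common heuristic, assuming the vertices are already projected to pixel coordinates and using a hypothetical target of about 8 pixels per segment; the function names and parameters are illustrative, and the thesis's actual metric may differ. On the GPU this logic would sit in a tessellation control shader; Python is used here only for readability.

```python
import math

MAX_TESS_LEVEL = 64.0  # minimum GL_MAX_TESS_GEN_LEVEL guaranteed by OpenGL 4.0


def edge_tess_level(a_px, b_px, target_px=8.0, max_level=MAX_TESS_LEVEL):
    """Number of segments for one edge, from its projected length in pixels."""
    length = math.dist(a_px, b_px)
    segments = math.ceil(length / target_px)
    # At least one segment; never exceed what the hardware can generate.
    return float(max(1, min(max_level, segments)))


def triangle_tess_levels(a_px, b_px, c_px, target_px=8.0):
    """Outer levels for the edges opposite a, b and c, plus an inner level.

    The level depends only on an edge's two endpoints (in either order), so
    neighbouring triangles that share an edge and its projected endpoints get
    the same level, which avoids cracks along that edge.
    """
    outer = (
        edge_tess_level(b_px, c_px, target_px),
        edge_tess_level(c_px, a_px, target_px),
        edge_tess_level(a_px, b_px, target_px),
    )
    inner = max(outer)  # simple choice: interior as fine as the finest edge
    return outer, inner


if __name__ == "__main__":
    print(triangle_tess_levels((0, 0), (80, 0), (0, 16)))  # ((11.0, 2.0, 10.0), 11.0)
```

Basing the level on screen-space length keeps subdivision dense only where polygons cover many pixels, which is where curvature and interpolation errors from a non-linear projection would be most visible.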


BibTeX

@mastersthesis{MEINDL-2015-OSR,
  title = "Omnidirectional Stereo Rendering of Virtual Environments",
  author = "Lukas Meindl",
  year = "2015",
  abstract = "In this thesis we discuss the use of omnidirectional stereo
    (omnistereo) rendering of virtual environments. We present
    an artefact-free technique to render omnistereo images for
    the CAVE in real time using the modern rendering pipeline
    and GPU-based tessellation. Depth perception in stereoscopic
    images is enabled through the horizontal disparities seen by
    the left and right eye. Conventional stereoscopic rendering,
    using off-axis or toe-in projections, provides correct depth
    cues in the entire field of view (FOV) for a single
    view-direction. Omnistereo panorama images, created from
    captures of the real world, provide stereo depth cues in all
    view direction. This concept has been adopted for rendering,
    as several techniques generating omnistereo images based on
    virtual environments have been presented. This is especially
    relevant in the context of surround-screen displays, as
    stereo depth can be provided for all view directions in a
    360° panorama simultaneously for upright positioned
    viewers. Omnistereo rendering also lifts the need for
    view-direction tracking, since the projection is independent
    of the view direction, unlike stereoscopic projections.
    However, omnistereo images only provide correct depth cues
    in the center of the FOV. Stereo disparity distortion errors
    occur in the periphery of the view and worsen with distance
    from the center of the view. Nevertheless, due to a number
    of properties of the human visual system, these errors are
    not necessarily noticeable. We improved the existing
    object-warp based omnistereo rendering technique for CAVE
    display systems by preceding it with screen-space adaptive
    tessellation methods. Our improved technique creates images
    without perceivable artefacts and runs on the GPU at
    real-time frame rates. The artefacts produced by the
    original technique without tessellation are described by us.
    Tessellation is used to remedy edge curvature and texture
    interpolation artefacts occurring at large polygons, due to
    the non-linearity of the omnistereo perspective. The
    original approach is based on off-axis projections. We
    showed that on-axis projections can be used as basis as
    well, leading to identical images. In addition, we created a
    technique to efficiently render omnistereo skyboxes for the
    CAVE using a pre-tessellated full-screen mesh. We
    implemented the techniques as part of an application for a
    three-walled CAVE in the VRVis research center and compared
    them.",
  month = nov,
  address = "Favoritenstrasse 9-11/E193-02, A-1040 Vienna, Austria",
  school = "Institute of Computer Graphics and Algorithms, Vienna
    University of Technology",
  keywords = "virtual reality, stereo rendering",
  URL = "https://www.cg.tuwien.ac.at/research/publications/2015/MEINDL-2015-OSR/",
}
