Information

- Publication Type: Journal Paper with Conference Talk

- Date: October 2016

- Journal: Proceedings of the 18th International ACM SIGACCESS Conference on Computers & Accessibility

- Location: Reno, Nevada, USA

- Lecturer: Andreas Reichinger

- Event: 18th International ACM SIGACCESS Conference on Computers and Accessibility

- Conference date: 23–26 October 2016

Abstract

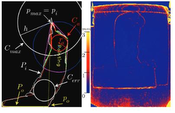

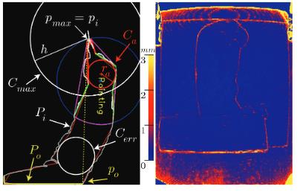

For blind and visually impaired people, tactile reliefs offer many benefits over the more classic raised line drawings or tactile diagrams, as depth, 3D shape and surface textures are directly perceivable. However, without proper guidance some reliefs are still difficult to explore autonomously. In this work, we present a gesture-controlled interactive audio guide (IAG) based on recent low-cost depth cameras that operates directly on relief surfaces. The interactively explorable, location-dependent verbal descriptions promise rapid tactile accessibility to 2.5D spatial information in a home or education setting, to on-line resources, or as a kiosk installation at public places. We present a working prototype, discuss design decisions and present the results of two evaluation sessions with a total of 20 visually impaired test users.
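The abstract does not describe how a fingertip position is turned into a location-dependent description. As a minimal illustrative sketch only (not the authors' method), assume the depth camera yields a fingertip position in image coordinates plus its depth, that the relief surface depth at that point is known, and that descriptions are attached to hand-drawn polygonal regions. The names `Region`, `is_touching`, `describe_at` and the 10 mm tolerance are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Region:
    """Hypothetical annotated area of the relief, as a polygon in image coordinates."""
    name: str
    polygon: list[tuple[float, float]]
    description: str


def point_in_polygon(x: float, y: float, polygon: list[tuple[float, float]]) -> bool:
    """Ray-casting test: count how often a ray from (x, y) to the right crosses an edge."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the ray's y value can be crossed (this also avoids y1 == y2).
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def is_touching(finger_depth_mm: float, surface_depth_mm: float,
                tolerance_mm: float = 10.0) -> bool:
    """Assumption: a fingertip 'touches' if its depth is within tolerance of the relief surface."""
    return abs(finger_depth_mm - surface_depth_mm) <= tolerance_mm


def describe_at(x: float, y: float, finger_depth_mm: float, surface_depth_mm: float,
                regions: list[Region], tolerance_mm: float = 10.0) -> str | None:
    """Return the description of the first region under a touching fingertip, else None."""
    if not is_touching(finger_depth_mm, surface_depth_mm, tolerance_mm):
        return None  # finger is hovering above the relief, not touching it
    for region in regions:
        if point_in_polygon(x, y, region.polygon):
            return region.description
    return None
```

For example, with a single square region `Region("head", [(0, 0), (10, 0), (10, 10), (0, 10)], "The head of the figure.")`, touching at (5, 5) with fingertip and surface depths of 802 mm and 800 mm returns that description, while a fingertip hovering 50 mm above the surface returns `None`.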

BibTeX

@article{Reichinger_2016,
  title = "Gesture-Based Interactive Audio Guide on Tactile Reliefs",
  author = "Andreas Reichinger and Stefan Maierhofer and Anton Fuhrmann and Werner Purgathofer",
  year = "2016",
  abstract = "For blind and visually impaired people, tactile reliefs
    offer many benefits over the more classic raised line
    drawings or tactile diagrams, as depth, 3D shape and
    surface textures are directly perceivable. However, without
    proper guidance some reliefs are still difficult to explore
    autonomously. In this work, we present a gesture-controlled
    interactive audio guide (IAG) based on recent low-cost depth
    cameras that operates directly on relief surfaces. The
    interactively explorable, location-dependent verbal
    descriptions promise rapid tactile accessibility to 2.5D
    spatial information in a home or education setting, to
    on-line resources, or as a kiosk installation at public
    places. We present a working prototype, discuss design
    decisions and present the results of two evaluation sessions
    with a total of 20 visually impaired test users.",
  month = oct,
  journal = "Proceedings of the 18th International ACM SIGACCESS Conference on Computers \& Accessibility",
  URL = "https://www.cg.tuwien.ac.at/research/publications/2016/Reichinger_2016/",
}