Information

- Publication Type: Poster

- Date: August 2018

- Publisher: ACM

- Location: Vancouver, Canada

- ISBN: 978-1-4503-5817-0/18/08

- Event: ACM SIGGRAPH 2018

- DOI: 10.1145/3230744.3230816

- Conference date: 12. August 2018 – 16. August 2018

- Pages: Article 41 –

- Keywords: point based rendering, point cloud, LIDAR

Abstract

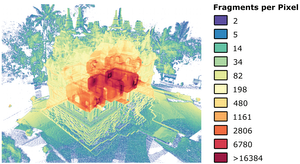

Rendering tens of millions of points in real time usually requires either high-end graphics cards or the use of spatial acceleration structures. We introduce a method to progressively display as many points as the GPU memory can hold in real time by reprojecting what was visible and randomly adding additional points to uniformly converge towards the full result within a few frames. Our method heavily limits the number of points that have to be rendered each frame, and it converges quickly and in a visually pleasing way, which makes it suitable even for notebooks with low-end GPUs. The data structure consists of a randomly shuffled array of points that is incrementally generated on-the-fly while points are being loaded.

Due to this, it can be used to directly view point clouds in common sequential formats such as LAS or LAZ while they are being loaded and without the need to generate spatial acceleration structures in advance, as long as the data fits into GPU memory.
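The abstract combines two ideas: an array that stays randomly shuffled while points are still being loaded, and a per-frame budget that re-renders the previously visible points and adds a batch of new ones. The Python sketch below illustrates both under simplifying assumptions that are not from the poster. Points are already projected to integer pixel coordinates with a static camera, so "reprojection" reduces to re-rendering last frame's visible point IDs. Depth testing is done in software rather than on the GPU. The "inside-out" Fisher-Yates shuffle is one plausible way to keep the array shuffled during loading, not necessarily the authors' exact scheme. The names `IncrementalShuffle`, `render`, `ProgressiveRenderer`, and `budget` are hypothetical.

```python
import math
import random
from typing import Dict, Iterable, List, Optional, Set, Tuple

# Hypothetical pre-projected point: (pixel_x, pixel_y, depth). A real renderer
# would re-project 3D positions with the current camera every frame.
Point = Tuple[int, int, float]


class IncrementalShuffle:
    """Keeps an array of points uniformly shuffled while points stream in.

    "Inside-out" Fisher-Yates (an assumed scheme): each new point goes to a
    random slot j in [0, n] and the previous occupant of j moves to the end,
    so after every insertion the array is a uniform random permutation of
    all points loaded so far.
    """

    def __init__(self, rng: Optional[random.Random] = None) -> None:
        self.points: List[Point] = []
        self.rng = rng or random.Random()

    def append(self, p: Point) -> None:
        n = len(self.points)
        j = self.rng.randint(0, n)  # randint includes both endpoints
        if j == n:
            self.points.append(p)
        else:
            self.points.append(self.points[j])
            self.points[j] = p


def render(ids: Iterable[int], points: List[Point]) -> Dict[Tuple[int, int], int]:
    """Software depth test: return the nearest point ID for every covered pixel."""
    nearest: Dict[Tuple[int, int], Tuple[float, int]] = {}
    for i in ids:
        x, y, depth = points[i]
        key = (depth, i)  # ties broken by ID so the result is deterministic
        if (x, y) not in nearest or key < nearest[(x, y)]:
            nearest[(x, y)] = key
    return {pixel: i for pixel, (_, i) in nearest.items()}


class ProgressiveRenderer:
    """Each frame: re-render last frame's visible points plus a fixed budget
    of new points taken cyclically from the (already shuffled) array."""

    def __init__(self, points: List[Point], budget: int) -> None:
        self.points = points
        self.budget = budget
        self.cursor = 0
        self.visible: Set[int] = set()

    def frame(self) -> Dict[Tuple[int, int], int]:
        n = len(self.points)
        if n == 0:
            return {}
        batch = [(self.cursor + k) % n for k in range(min(self.budget, n))]
        self.cursor = (self.cursor + len(batch)) % n
        image = render(self.visible.union(batch), self.points)
        self.visible = set(image.values())  # carried over to the next frame
        return image

    def frames_to_converge(self) -> int:
        # With a static camera, every point has been drawn at least once
        # after this many frames.
        return math.ceil(len(self.points) / self.budget)
```

Because any window of a uniformly shuffled array is a uniform random subset, each frame's batch samples the whole cloud evenly, so intermediate frames look like a sparser version of the final image instead of filling in region by region. With a static camera, once a pixel's nearest point has been drawn it keeps winning the depth test in later frames, so the image matches a full render after `frames_to_converge()` frames while only `budget` new points (plus the visible set) are drawn per frame.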

BibTeX

@misc{schuetz-2018-PPC,
  title = "Progressive Real-Time Rendering of Unprocessed Point Clouds",
  author = "Markus Sch\"{u}tz and Michael Wimmer",
  year = "2018",
  abstract = "Rendering tens of millions of points in real time usually
    requires either high-end graphics cards, or the use of
    spatial acceleration structures. We introduce a method to
    progressively display as many points as the GPU memory can
    hold in real time by reprojecting what was visible and
    randomly adding additional points to uniformly converge
    towards the full result within a few frames. Our method
    heavily limits the number of points that have to be rendered
    each frame and it converges quickly and in a visually
    pleasing way, which makes it suitable even for notebooks
    with low-end GPUs. The data structure consists of a
    randomly shuffled array of points that is incrementally
    generated on-the-fly while points are being loaded. Due
    to this, it can be used to directly view point clouds in
    common sequential formats such as LAS or LAZ while they are
    being loaded and without the need to generate spatial
    acceleration structures in advance, as long as the data fits
    into GPU memory.",
  month = aug,
  publisher = "ACM",
  location = "Vancouver, Canada",
  isbn = "978-1-4503-5817-0/18/08",
  event = "ACM SIGGRAPH 2018",
  doi = "10.1145/3230744.3230816",
  conference_date = "Poster presented at ACM SIGGRAPH 2018
    (2018-08-12--2018-08-16)",
  note = "Article 41--",
  pages = "Article 41--",
  keywords = "point based rendering, point cloud, LIDAR",
  URL = "https://www.cg.tuwien.ac.at/research/publications/2018/schuetz-2018-PPC/",
}
