Information

- Publication Type: Other Reviewed Publication

- Date: 2019

- Booktitle: Proceedings of the Workshop on Visualization for AI explainability (VISxAI)

- Editor: El-Assady, Mennatallah and Chau, Duen Horng (Polo) and Hohman, Fred and Perer, Adam and Strobelt, Hendrik and Viégas, Fernanda

- Location: Vancouver

- Event: Workshop on Visualization for AI explainability (VISxAI) at IEEE VIS 2019

Abstract

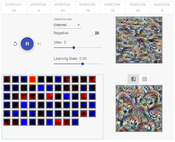

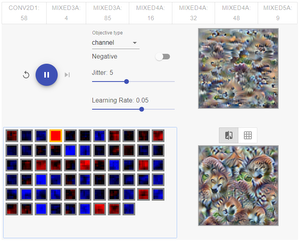

Excellent explanations of feature visualization already exist in the form of interactive articles, e.g. DeepDream, Feature Visualization, The Building Blocks of Interpretability, Activation Atlas, and Visualizing GoogLeNet Classes. They mostly rely on curated, prerendered visualizations and additionally provide Colab notebooks or public repositories that allow the reader to reproduce the results. While precalculated visualizations have many advantages (directability, more processing budget), they are always discretized samples of a continuous parameter space. In the spirit of TensorFlow Playground, this project aims to provide a fully interactive interface to some basic functionality of the originally Python-based Lucid library, roughly corresponding to the concepts presented in the “Feature Visualization” article. The user is invited to explore the effect of parameter changes in a playful way, without any knowledge of programming; this is enabled by an implementation on top of TensorFlow.js. Live updates of the generated input image as well as the feature map activations should give the user a visual intuition for the otherwise abstract optimization process. Further, this interface opens the domain of feature visualization to non-experts, as no scripting is required.

BibTeX

@inproceedings{sietzen-ifv-2019,
  title =      "Interactive Feature Visualization in the Browser",
  author =     "Stefan Sietzen and Manuela Waldner",
  year =       "2019",
  abstract =   "Excellent explanations of feature visualization already
    exist in the form of interactive articles, e.g. DeepDream,
    Feature Visualization, The Building Blocks of
    Interpretability, Activation Atlas, and Visualizing GoogLeNet
    Classes. They mostly rely on curated, prerendered
    visualizations and additionally provide Colab notebooks or
    public repositories that allow the reader to reproduce the
    results. While precalculated visualizations have many
    advantages (directability, more processing budget), they are
    always discretized samples of a continuous parameter space.
    In the spirit of TensorFlow Playground, this project aims to
    provide a fully interactive interface to some basic
    functionality of the originally Python-based Lucid library,
    roughly corresponding to the concepts presented in the
    ``Feature Visualization'' article. The user is invited to
    explore the effect of parameter changes in a playful way,
    without any knowledge of programming; this is enabled by
    an implementation on top of TensorFlow.js. Live updates of
    the generated input image as well as the feature map activations
    should give the user a visual intuition for the otherwise
    abstract optimization process. Further, this interface opens
    the domain of feature visualization to non-experts, as no
    scripting is required.",
  month =      oct,
  booktitle =  "Proceedings of the Workshop on Visualization for AI
    explainability (VISxAI)",
  editor =     "El-Assady, Mennatallah and Chau, Duen Horng (Polo) and
    Hohman, Fred and Perer, Adam and Strobelt, Hendrik and
    Vi\'{e}gas, Fernanda",
  location =   "Vancouver",
  event =      "Workshop on Visualization for AI explainability (VISxAI) at
    IEEE VIS 2019",
  URL =        "https://www.cg.tuwien.ac.at/research/publications/2019/sietzen-ifv-2019/",
}
