Information

- Publication Type: Bachelor Thesis

- Workgroup(s)/Project(s): not specified

- Date: March 2025

- Date (Start): 1 September 2024

- Date (End): 1 March 2025

- Matriculation Number (Matrikelnummer): 01429490

- First Supervisor: Martin Ilčík

Abstract

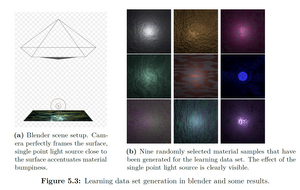

I explore the possibility of generating procedural Blender shaders of self-similar, semiregular surfaces from an input image derived from a photograph or a digital render. The resulting Blender shader should produce a material that looks similar to the input image. This would be useful because procedural materials give 3D artists flexibility and creative control over a material's look that image-based materials cannot offer, while inheriting all the benefits of image-based materials, such as availability and ease of use. The shader is generated by a neural parameter predictor followed by extraction of a sub-shader from a given, pre-built, general shader graph (the super-shader). The parameters are predicted by an MLP that takes statistical texture descriptors as input. These descriptors are extracted from a pre-trained image-classification CNN applied to the original photograph or render. With this pipeline, a large training dataset for the MLP can easily be generated synthetically in Blender by rendering the super-shader with randomized parameters.

BibTeX

@bachelorsthesis{winklmueller-2025-ims,
  title = "Inverse Material Synthesis via Sub-Shader Extraction",
  author = "Marcel Winklm{\"u}ller",
  year = "2025",
  abstract = "I explore the possibility of generating procedural Blender
              shaders of self-similar, semiregular surfaces from an input
              image derived from a photograph or a digital render. The
              resulting Blender shader should produce a material that looks
              similar to the input image. This would be useful because
              procedural materials give 3D artists flexibility and creative
              control over a material's look that image-based materials
              cannot offer, while inheriting all the benefits of
              image-based materials, such as availability and ease of use.
              The shader is generated by a neural parameter predictor
              followed by extraction of a sub-shader from a given,
              pre-built, general shader graph (the super-shader). The
              parameters are predicted by an MLP that takes statistical
              texture descriptors as input. These descriptors are
              extracted from a pre-trained image-classification CNN
              applied to the original photograph or render. With this
              pipeline, a large training dataset for the MLP can easily be
              generated synthetically in Blender by rendering the
              super-shader with randomized parameters.",
  month = mar,
  address = "Favoritenstrasse 9-11/E193-02, A-1040 Vienna, Austria",
  school = "Research Unit of Computer Graphics, Institute of Visual
            Computing and Human-Centered Technology, Faculty of
            Informatics, TU Wien",
  URL = "https://www.cg.tuwien.ac.at/research/publications/2025/winklmueller-2025-ims/",
}