Motivation

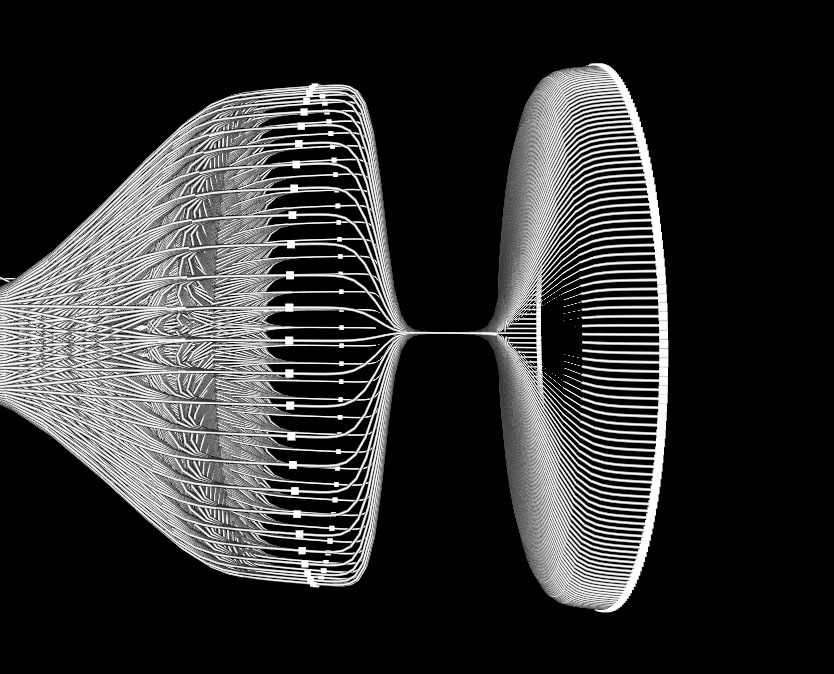

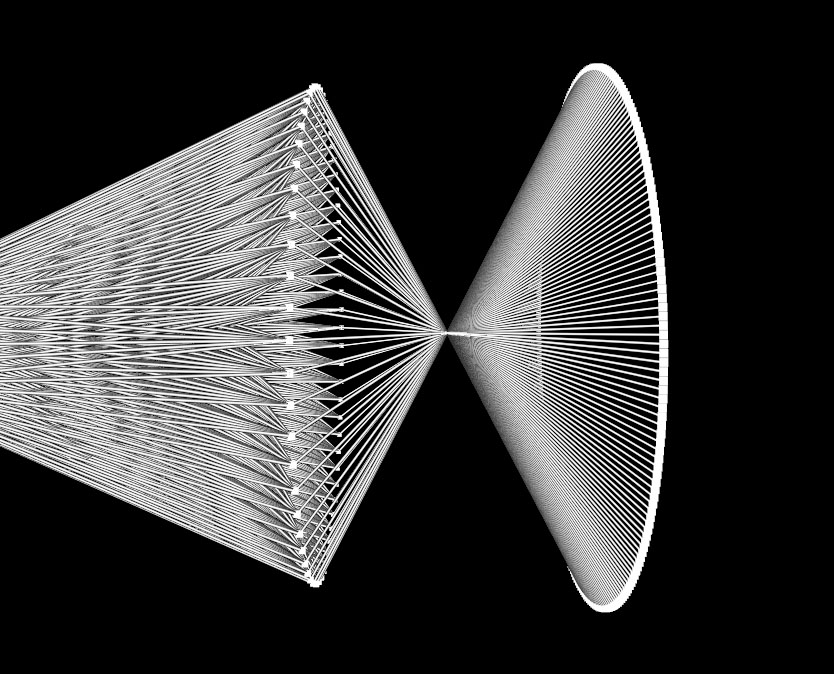

While studying neural networks, mainly last summer, I often struggled to understand how convolutional layers are connected to each other and to other types of layers. When a fully connected layer is connected to another fully connected layer or to an output layer, it is easy to see that there is exactly one weighted edge for each possible pair of nodes. With convolutional layers the situation is a bit different, because weights are shared among many neighbourhoods of nodes, and because there are more dimensions to deal with: in a fully connected layer the weight matrix is two-dimensional, with shape [n_input, n_output], while the weights of a convolutional layer have a four-dimensional shape of [kernelwidth, kernelheight, inputdepth, outputdepth].
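As a rough illustration of the two weight shapes described above, the sketch below counts the weights in each case. The layer sizes (a 32x32 RGB input, 16 output feature maps, a 3x3 kernel) and the function names are made up for this example, and biases are ignored.

```python
from math import prod

def dense_weight_shape(n_input, n_output):
    # One weighted edge for every (input node, output node) pair.
    return (n_input, n_output)

def conv_weight_shape(kernel_width, kernel_height, input_depth, output_depth):
    # One small kernel per (input map, output map) pair, reused at every position.
    return (kernel_width, kernel_height, input_depth, output_depth)

# Hypothetical sizes: a 32x32 RGB input mapped to 16 feature maps of 32x32.
dense_shape = dense_weight_shape(32 * 32 * 3, 32 * 32 * 16)
conv_shape = conv_weight_shape(3, 3, 3, 16)

dense_count = prod(dense_shape)  # number of weights in the dense layer
conv_count = prod(conv_shape)    # number of weights in the convolutional layer

print(dense_shape, dense_count)
print(conv_shape, conv_count)
```

The convolutional layer needs far fewer weights here because the same kernels are shared across all spatial positions, which is also what makes its connectivity harder to picture.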

This four-dimensional connectivity allows for unusual calculations such as a 1x1 convolution, which sounds absurd to someone used to image processing but is perfectly valid in a convolutional layer: we simply connect each input pixel to the pixel at the same two-dimensional position in all of the output feature maps, in effect applying a small fully connected layer across the depth dimension at every spatial location, with the same weights shared by all locations. When teaching such concepts, it is easy to confuse the listener, especially without a clear visual representation. Although several kinds of schematic view exist, most are heavily simplified and focus on only certain aspects of the layers; they give a broad overview of the architecture but remain unintuitive for beginners.
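To make the 1x1 convolution described above more concrete, here is a minimal pure-Python sketch. It assumes the input is stored as nested lists indexed [row][column][input depth] and that the [1, 1, inputdepth, outputdepth] weight tensor has been collapsed to a plain [inputdepth][outputdepth] matrix. The function name `conv1x1` and the sample values are invented for this illustration.

```python
def conv1x1(image, weights):
    """Apply a 1x1 convolution.

    image:   nested lists indexed [row][column][input_depth]
    weights: nested lists indexed [input_depth][output_depth]
    """
    output_depth = len(weights[0])
    output = []
    for row in image:
        out_row = []
        for pixel in row:
            # Each pixel is mixed only across depth, never with its neighbours.
            out_pixel = [
                sum(pixel[c] * weights[c][k] for c in range(len(pixel)))
                for k in range(output_depth)
            ]
            out_row.append(out_pixel)
        output.append(out_row)
    return output

# A 2x2 input with depth 2, mapped to 3 output feature maps.
image = [
    [[1.0, 2.0], [3.0, 4.0]],
    [[5.0, 6.0], [7.0, 8.0]],
]
weights = [
    [1.0, 0.0, 1.0],
    [0.0, 1.0, 1.0],
]
result = conv1x1(image, weights)
print(result[0][0])  # output depth vector for the top-left pixel
```

The spatial size of the output matches the input; only the depth changes, from 2 to 3 in this example.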

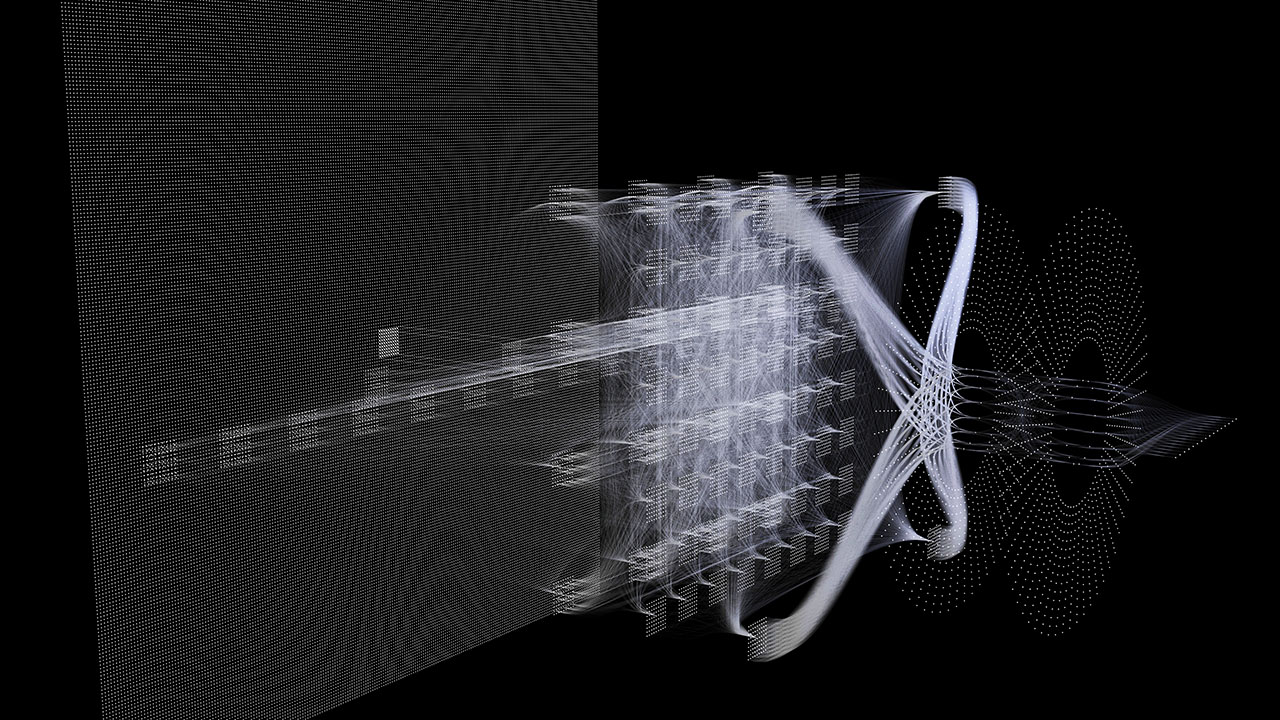

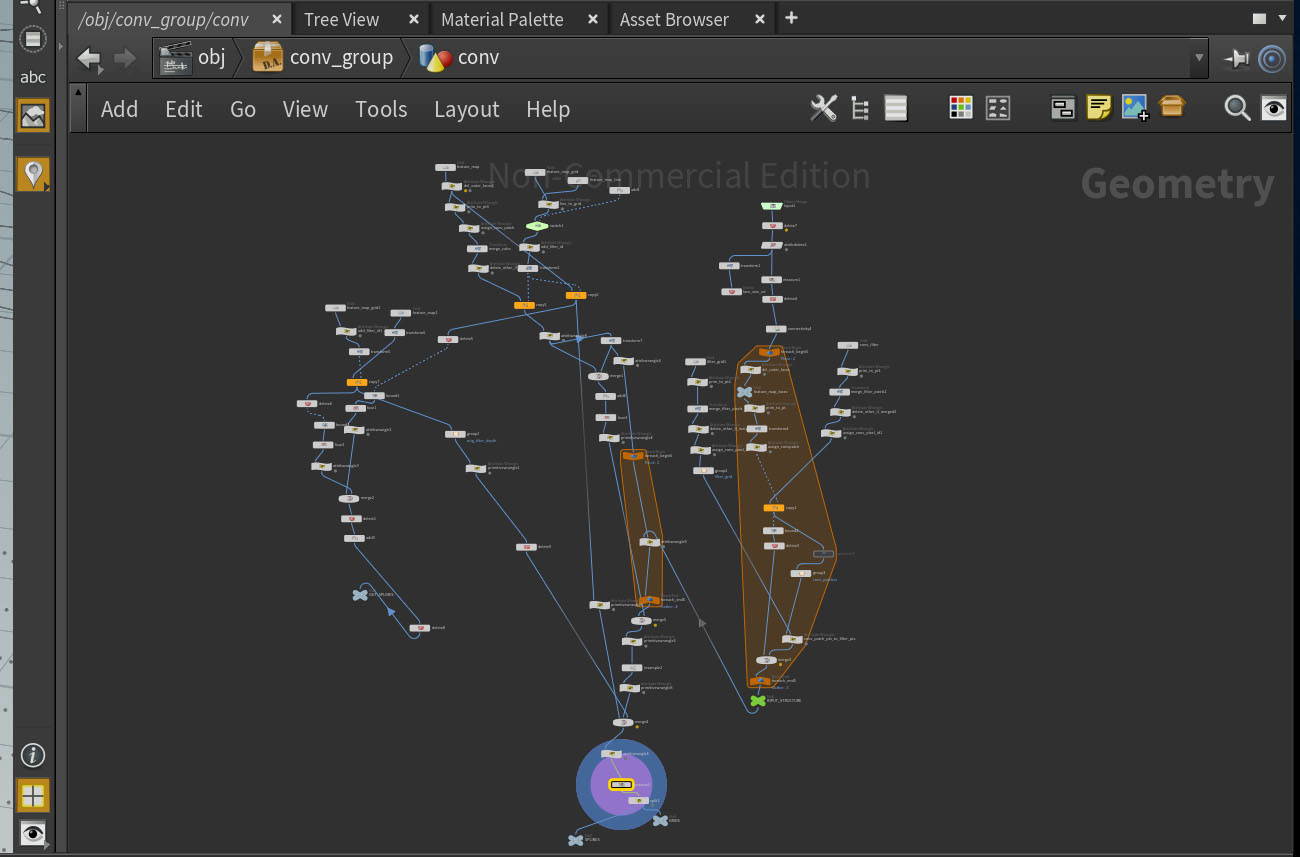

My goal was therefore to build a tool that lets someone explaining CNNs interactively expand, reduce, reshape, recombine, and so on, a number of CNN components in full 3D, so that the different aspects of a CNN can be shown with a good visual representation.