Motivation

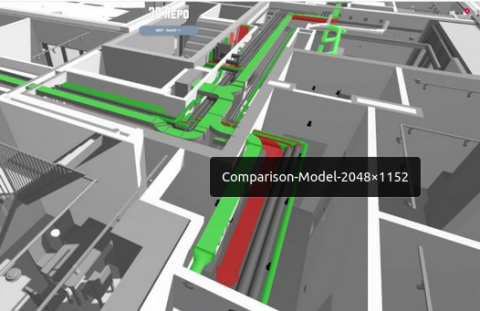

If an existing 3D scan or model of a scene is available, we want to point a smartphone at the scene and immediately see any changes as an augmented reality overlay.

To this end, we detect which objects have been removed, added, or simply moved. Observing such changes is useful in many environments, e.g., for taking inventory of offices, warehouses, or urban spaces. Furthermore, this information can be used to train deep learning algorithms for the semantic understanding of such environments.

Description

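Tasks (the extent depends on whether the work is done as a PR, BA, or DA, i.e., a practical project, a bachelor's thesis, or a diploma thesis)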

- Smartphone app prototype that registers a point cloud (obtained from RGB images via machine-learning-based depth estimation, e.g., LiteDepth https://arxiv.org/abs/2209.00961) to a previously scanned point cloud

- Based on a modified InfiniTAM (infinitam.org) implementation that uses a Kinect 3D scanner to compare an existing scan with a live scan in real time

- Store the view frusta of the sensor (i.e., the space it has actually seen) in a flattened data structure to differentiate observed changes from unobserved, unknown regions: https://www.cg.tuwien.ac.at/research/publications/2014/Radwan-2014-CDR/

- Extend the comparison of octree nodes between the old and new models, based on the permitted distance (points plus uncertainty ellipsoids), to classify geometry as changed or unchanged

- Evaluate the robustness of the algorithm using BlenSor virtual scans of a ground-truth 3D model

- Classify changed objects with machine learning

- Track whether an added object and a removed object are the same object (i.e., the object was moved)

- Fast parallel implementation in CUDA, with a run-time comparison to the state of the art

- Estimate volume changes (using surface reconstruction and voxel-wise differencing)

- Visualize changes over a time series
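For the smartphone prototype in the first task, a depth map predicted from an RGB image has to be turned into a point cloud before it can be registered to the existing scan. The following is a minimal sketch of that back-projection step, assuming a pinhole camera with known intrinsics (`fx`, `fy`, `cx`, `cy`) and a dense metric depth map in which 0 marks invalid pixels. `Point3` and `backProject` are hypothetical names, and if the depth network only predicts relative depth, a metric scale would have to be recovered first (not shown).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical point type; a real app would likely use its framework's own.
struct Point3 {
    float x, y, z;
};

// Back-projects a row-major depth map (metres, 0 = invalid) into camera-frame
// 3D points using the pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy.
std::vector<Point3> backProject(const std::vector<float>& depth, int width,
                                int height, float fx, float fy, float cx,
                                float cy) {
    assert(width > 0 && height > 0);
    assert(depth.size() == static_cast<std::size_t>(width) * height);
    std::vector<Point3> cloud;
    cloud.reserve(depth.size());
    for (int v = 0; v < height; ++v) {
        for (int u = 0; u < width; ++u) {
            const float z = depth[static_cast<std::size_t>(v) * width + u];
            if (!(z > 0.0f)) continue;  // skip invalid (zero, negative, NaN) depths
            cloud.push_back({(u - cx) * z / fx, (v - cy) * z / fy, z});
        }
    }
    return cloud;
}
```

The resulting camera-frame cloud would then be transformed by the phone's estimated pose and registered against the previously scanned cloud, e.g., with an ICP variant.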
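The view-frustum task in the list above hinges on telling "seen and changed" apart from "never seen". A minimal sketch, assuming all stored frusta share one pinhole camera model and are kept as a plain array of world-to-camera poses (a simple stand-in for the flattened data structure of the cited work, not a reproduction of it). `PinholeCamera`, `Pose`, `seenByAny`, and `classify` are hypothetical names. The sketch ignores occlusion: a point behind a wall still counts as inside the frustum, which a fuller version would handle by also comparing against the recorded depth maps.

```cpp
#include <array>
#include <cassert>
#include <vector>

struct Vec3 {
    double x, y, z;
};

// Hypothetical pinhole sensor model with a usable depth range.
struct PinholeCamera {
    double fx, fy, cx, cy;  // intrinsics in pixels
    int width, height;      // image size in pixels
    double zNear, zFar;     // valid depth range in metres
};

// World-to-camera rigid transform: p_cam = R * p_world + t (R row-major).
struct Pose {
    std::array<double, 9> R;
    Vec3 t;
};

Vec3 toCamera(const Pose& T, const Vec3& p) {
    const auto& R = T.R;
    return {R[0] * p.x + R[1] * p.y + R[2] * p.z + T.t.x,
            R[3] * p.x + R[4] * p.y + R[5] * p.z + T.t.y,
            R[6] * p.x + R[7] * p.y + R[8] * p.z + T.t.z};
}

// True if a camera-frame point projects into the image within the depth range.
bool insideFrustum(const PinholeCamera& cam, const Vec3& p) {
    assert(cam.zNear > 0.0);  // guarantees p.z > 0 before dividing
    if (p.z < cam.zNear || p.z > cam.zFar) return false;
    const double u = cam.fx * p.x / p.z + cam.cx;
    const double v = cam.fy * p.y / p.z + cam.cy;
    return u >= 0.0 && u < cam.width && v >= 0.0 && v < cam.height;
}

// All stored frusta are kept in one flat array of poses (shared intrinsics).
bool seenByAny(const PinholeCamera& cam, const std::vector<Pose>& frusta,
               const Vec3& pWorld) {
    for (const Pose& T : frusta)
        if (insideFrustum(cam, toCamera(T, pWorld))) return true;
    return false;
}

enum class Observation { Unknown, SeenUnchanged, SeenChanged };

// A missing match only counts as a change if the region was actually observed.
Observation classify(bool seen, bool matchedInLiveScan) {
    if (!seen) return Observation::Unknown;
    return matchedInLiveScan ? Observation::SeenUnchanged
                             : Observation::SeenChanged;
}
```

The design point is that absence of a match is only evidence of removal where the sensor looked; everything outside every stored frustum stays "unknown".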
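For the octree comparison with "points plus uncertainty ellipsoids" in the task list, one common way to turn a permitted distance into a test is a squared Mahalanobis distance checked against a chi-square quantile. The sketch below shows only that per-point test, assuming each point carries a 3×3 covariance matrix (its uncertainty ellipsoid), that old and new uncertainties are independent so their covariances add, and a 95 % threshold. The octree traversal that would apply it per node is not shown, and `mahalanobisSq` and `isChanged` are hypothetical names.

```cpp
#include <array>
#include <cassert>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;

// Squared Mahalanobis distance r^T S^-1 r for a symmetric positive-definite S,
// using the adjugate (cofactor) formula for the 3x3 inverse.
double mahalanobisSq(const Vec3& r, const Mat3& S) {
    const double a = S[0][0], b = S[0][1], c = S[0][2];
    const double d = S[1][0], e = S[1][1], f = S[1][2];
    const double g = S[2][0], h = S[2][1], i = S[2][2];
    const double A = e * i - f * h, B = -(d * i - f * g), C = d * h - e * g;
    const double det = a * A + b * B + c * C;
    assert(det > 0.0 && "covariance must be positive definite");
    const Mat3 adj = {{{A, -(b * i - c * h), b * f - c * e},
                       {B, a * i - c * g, -(a * f - c * d)},
                       {C, -(a * h - b * g), a * e - b * d}}};
    double q = 0.0;
    for (int j = 0; j < 3; ++j)
        for (int k = 0; k < 3; ++k) q += r[j] * adj[j][k] * r[k];
    return q / det;  // adj / det is the inverse of S
}

// 95 % quantile of the chi-square distribution with 3 degrees of freedom.
constexpr double kChi2Dof3P95 = 7.815;

// Flags a live point as changed if it lies outside the combined uncertainty
// ellipsoid around its corresponding old point.
bool isChanged(const Vec3& oldP, const Mat3& oldCov, const Vec3& newP,
               const Mat3& newCov, double threshold = kChi2Dof3P95) {
    Vec3 r{};
    Mat3 S{};
    for (int j = 0; j < 3; ++j) {
        r[j] = newP[j] - oldP[j];
        for (int k = 0; k < 3; ++k) S[j][k] = oldCov[j][k] + newCov[j][k];
    }
    return mahalanobisSq(r, S) > threshold;
}
```

Unlike a fixed Euclidean tolerance, this lets the permitted distance grow along directions in which the sensor is less certain, e.g., along the viewing ray.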
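The volume-change task in the list above can be reduced to counting voxels that are occupied in only one of the two models. A minimal sketch, assuming both models have already been converted to solid (filled) occupancy, e.g., by voxelizing reconstructed closed surfaces, and are given as sets of integer voxel indices with the same voxel size. `voxelOf`, `VolumeChange`, and `volumeChange` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <set>
#include <tuple>

using VoxelKey = std::tuple<std::int64_t, std::int64_t, std::int64_t>;

// Index of the voxel (edge length voxelSize) that contains point (x, y, z).
VoxelKey voxelOf(double x, double y, double z, double voxelSize) {
    assert(voxelSize > 0.0);
    return std::make_tuple(static_cast<std::int64_t>(std::floor(x / voxelSize)),
                           static_cast<std::int64_t>(std::floor(y / voxelSize)),
                           static_cast<std::int64_t>(std::floor(z / voxelSize)));
}

struct VolumeChange {
    double added;    // volume occupied now but not before
    double removed;  // volume occupied before but not now
};

// Voxel-wise difference of two occupancy sets, converted to volume units.
VolumeChange volumeChange(const std::set<VoxelKey>& oldOcc,
                          const std::set<VoxelKey>& newOcc, double voxelSize) {
    assert(voxelSize > 0.0);
    const double cellVolume = voxelSize * voxelSize * voxelSize;
    std::size_t added = 0, removed = 0;
    for (const VoxelKey& k : newOcc)
        if (oldOcc.count(k) == 0) ++added;
    for (const VoxelKey& k : oldOcc)
        if (newOcc.count(k) == 0) ++removed;
    return {static_cast<double>(added) * cellVolume,
            static_cast<double>(removed) * cellVolume};
}
```

The resolution of this estimate is bounded by `voxelSize`: thin or partially occupied structures are over- or under-counted.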

Requirements

C++ programming skills and an interest in geometry processing. Prior experience with geometry processing, 3D data structures such as octrees and point clouds, machine learning, or CUDA will speed up development.

Environment

Platform-independent C++.

A bonus of €500 (PR/BA) or €1,000 (DA) is paid if the work is completed to satisfaction within an agreed time frame of 6 months (PR/BA) or 12 months (DA).